The core concept of an extensible framework for defining available endpoints/tools is something I can agree with. The implementation of having multiple Node.js or Python servers running on my laptop is not something I can support.

Let's define what we actually need:

- List of available endpoints/tools and a description

- A list of credentials along with examples of how to exchange those for access

- A few examples of how to use the endpoints so that we can inject them into context as few-shot examples

None of these things needs to be an actual runtime. They could simply be a downloadable spec that clients like Cursor query from a CDN. An MCP client could then expose the necessary credential configuration dynamically and load the few-shot examples, along with the endpoint registry, into context.
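To make that concrete, here is a minimal sketch of a static spec and a client that consumes it. It assumes a hypothetical JSON document with `endpoints`, `credentials`, and `examples` keys; the schema, field names, and the `fetch_spec` URL argument are illustrative only and are not part of MCP or Cursor. The client parses the spec, reports which credentials the user still needs to configure, and renders the endpoint registry plus few-shot examples into a context string. No server process is involved.

```python
import json
import urllib.request
from dataclasses import dataclass

# Hypothetical spec shape: one static JSON file that could sit on a CDN.
SAMPLE_SPEC = """
{
  "endpoints": [
    {"name": "list_issues", "method": "GET", "path": "/v1/issues",
     "description": "List open issues for a project."}
  ],
  "credentials": [
    {"name": "API_TOKEN",
     "exchange": "Send as 'Authorization: Bearer <API_TOKEN>' on every request."}
  ],
  "examples": [
    {"prompt": "Show my open issues", "call": "GET /v1/issues?state=open"}
  ]
}
"""


@dataclass
class ToolSpec:
    endpoints: list
    credentials: list
    examples: list


def fetch_spec(url: str) -> ToolSpec:
    """Download the spec from a (hypothetical) CDN URL and parse it."""
    with urllib.request.urlopen(url) as resp:
        return parse_spec(resp.read().decode("utf-8"))


def parse_spec(text: str) -> ToolSpec:
    """Parse spec JSON; missing sections default to empty lists."""
    data = json.loads(text)
    return ToolSpec(
        endpoints=data.get("endpoints", []),
        credentials=data.get("credentials", []),
        examples=data.get("examples", []),
    )


def missing_credentials(spec: ToolSpec, configured: dict) -> list:
    """Names of credentials the client should still prompt the user for."""
    return [c["name"] for c in spec.credentials if c["name"] not in configured]


def build_context(spec: ToolSpec) -> str:
    """Render the endpoint registry and few-shot examples as plain text."""
    lines = ["Available endpoints:"]
    for ep in spec.endpoints:
        lines.append(f"- {ep['method']} {ep['path']}: {ep['description']}")
    lines.append("Examples:")
    for ex in spec.examples:
        lines.append(f"User: {ex['prompt']}\nCall: {ex['call']}")
    return "\n".join(lines)


if __name__ == "__main__":
    spec = parse_spec(SAMPLE_SPEC)
    print(missing_credentials(spec, configured={}))
    print(build_context(spec))
```

With nothing configured, `missing_credentials` returns `["API_TOKEN"]`, which is the cue for the client to show a credential form; once the token is set, the rendered context is all the model needs.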

Now you might say, "Peyton, MCPs allow us to define multi-step actions at the client level."

You provide building blocks, not structures. If you have an operation that consists of multiple REST calls, maybe you should expose a single REST endpoint that combines that logic and list it in your MCP spec.

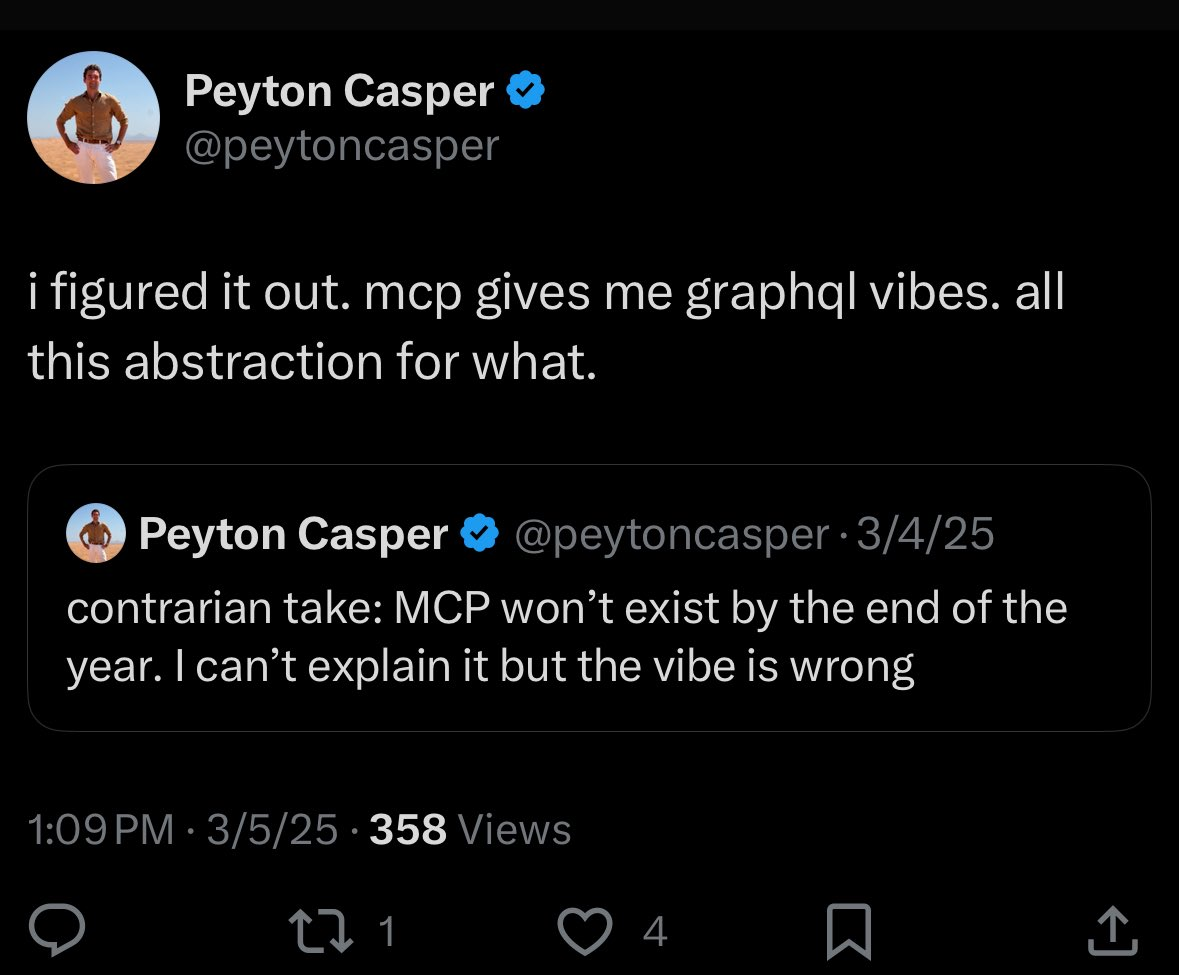

I am not convinced that MCP is the way, and I will keep using terminal tool calling within Cursor, because I don't believe MCP has solved any real challenges other than providing busywork for product teams.

A true Agent-to-Agent protocol will appear, but I believe we still have foundational agent-reliability challenges to solve before that becomes a requirement.

The one lesson I have learned about OSS protocols is that they survive solely on momentum AND being in the right place at the right time. One without the other means a protocol is bound to be recreated. MCPs clearly have momentum, but I don't believe tool-calling accuracy is yet at the level needed for MCP to be in the right place at the right time.